Today, AI-powered chatbots handle sensitive customer data, make business decisions, and represent brands. They've become integral to customer service, sales, and support operations across every industry. A single error can damage customer trust, violate compliance requirements and lead to lost revenue.

The good news is that thorough chatbot testing tools can catch issues before your users ever experience them.

TLDR:

-

Modern chatbot testing must handle LLM unpredictability, context memory, and hallucination detection.

-

Thorough testing covers functional flows, performance under load, and security vulnerabilities.

-

Continuous automated testing in CI/CD pipelines catches regressions before users experience them.

-

Cekura provides end-to-end testing and real-time monitoring for LLM-powered chatbots.

What Is Chatbot Testing in 2025?

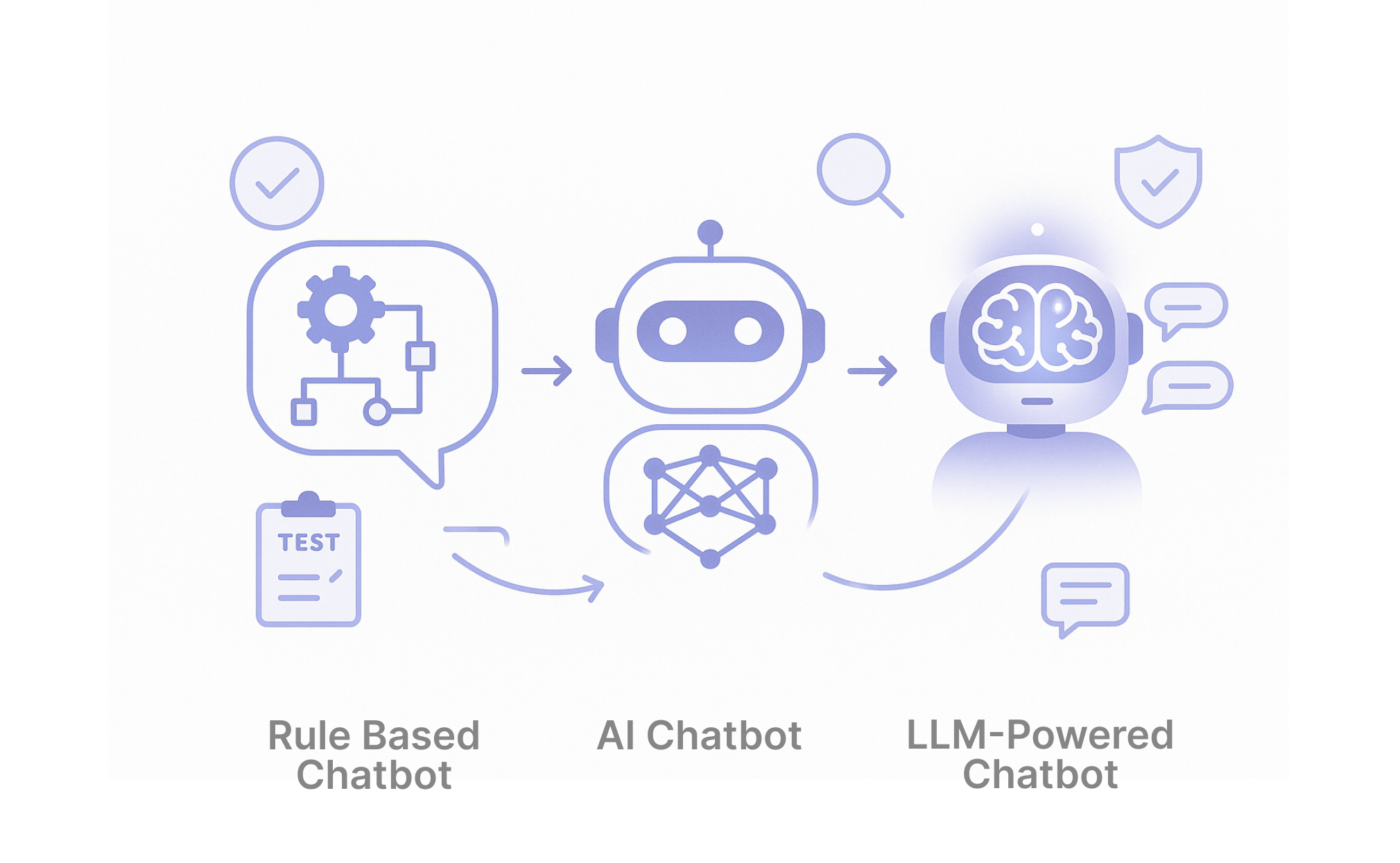

In 2025, there are three distinct types of chatbots that require different testing approaches.

Rule-based chatbots follow simple if-then logic and are relatively straightforward to test.

AI bots use machine learning to understand patterns and respond dynamically.

LLM-powered bots use advanced AI models to generate human-like responses.

Modern chatbot testing is about making sure AI agents behave reliably, follow instructions precisely, and maintain context across complex conversations.

LLM failures are different from failures in traditional software because they are unpredictable. The same input might generate different responses, making standard testing approaches insufficient. You need to validate functionality, accuracy, safety, and adherence to business rules.

Types of Chatbot Testing

Functional Testing

Functional testing makes sure your chatbot performs its core tasks correctly.

Intent recognition testing verifies that your bot correctly identifies what users want, even when they phrase requests differently.

Entity extraction testing confirms that your bot can accurately identify key information like dates, names, order numbers, and product details. If a user says, "I need to change my delivery location for order #12345 to 123 Main Street," your bot must extract the order number and new location correctly.

Business logic testing validates that your bot follows company policies and procedures. For example, if your return policy allows returns only within 30 days, your bot should never approve returns for older purchases.

Performance and Load Testing

Performance testing makes sure your chatbot responds quickly and reliably under different conditions.

Load testing simulates hundreds or thousands of concurrent conversations to identify bottlenecks. Your bot might work perfectly with ten users but crash when handling a hundred simultaneous conversations.

Then you have to consider scalability testing. What happens when your viral marketing campaign increases traffic to your chatbot tenfold?

Backend API performance testing is important because most chatbots integrate with external systems. If your bot needs to check inventory, process payments, or access customer records, those API calls must be fast and reliable.

Memory usage and resource consumption testing can help you optimize costs.

Security and Compliance Testing

Security testing for chatbots involves unique challenges.

Data privacy testing makes sure your bot handles sensitive information correctly. This includes validating that personal data isn't logged inappropriately, shared with unauthorized systems, or retained longer than necessary.

Prompt injection testing is important for LLM-powered bots. Attackers might try to manipulate your bot by injecting malicious prompts that override its instructions.

Compliance testing validates adherence to industry-specific regulations like GDPR, HIPAA, or PCI-DSS.

Red teaming conversational AI involves deliberately trying to break your bot's security measures. This adversarial testing helps identify vulnerabilities before malicious users do.

Authentication and authorization testing makes sure your bot properly verifies user identities and restricts access to sensitive functions.

Advanced Testing Techniques for LLM-Powered Chatbots

LLM-powered bots introduce new failure modes that traditional QA methods can’t catch. To address these, modern testing focuses on context retention, hallucination detection, consistency, and confidence calibration. The table below summarizes these key techniques and their purpose.

| Testing Type | Purpose | Key Metrics |

|---|---|---|

| Context retention | Validates memory across turns | Context accuracy, reference resolution |

| Hallucination detection | Prevents false information | Factual accuracy, source attribution |

| Consistency testing | Provides reliable responses | Response variance, policy compliance |

| Confidence calibration | Manages uncertainty appropriately | Confidence scores, "I don't know" rates |

Chatbot Testing Best Practices for 2025

Effective chatbot testing requires a systematic approach that covers the entire development lifecycle. Here are nine proven strategies that separate successful deployments from costly failures:

-

Test under real user conditions using the actual devices, browsers, operating systems, and network conditions your users experience.

-

Make chatbot testing an integral component of your development and deployment process. Also make testing a standard part of your CI/CD pipeline so every code change triggers automated test suites.

-

Use performance monitoring tools to track key metrics like response time, user engagement, conversation completion rates, and error frequencies. Conversation monitoring metrics provide insights into how your bot performs in production.

-

Implement continuous testing rather than one-time validation.

-

Create diverse test scenarios that cover edge cases and happy paths. Real users behave unpredictably, interrupt conversations, and test your bot's limits.

-

Set clear success criteria and KPIs before testing begins. Define what "good enough" means for response accuracy, conversation completion rates, and user satisfaction scores.

-

Document issues systematically. When problems occur, capture the full conversation context, user intent, and system state, to allow effective debugging and prevention.

-

Automated testing strategies and chat agents should run continuously, including before major releases.

-

Consider using specialized AI conversation quality tools that understand the unique challenges of conversational AI testing.

Making testing continuous, rather than a one-time activity, is key. Automated AI agent evaluation allows you to run these tests whenever you update your bot's training data, prompts, or business logic.

Regression Testing and Continuous Evaluation

As chatbots evolve, updates to prompts, logic, or models can unintentionally break existing conversation flows. Regression testing ensures these updates do not cause errors or inconsistent behavior. Automated test suites replay key conversation scenarios and compare results against expected outputs to confirm that essential workflows, such as order lookups or password resets, still function correctly.

Continuous evaluation extends this process into production. By tracking metrics like response accuracy, completion rates, and user satisfaction, teams can detect performance drift early. Integrating these tests into CI/CD pipelines keeps quality consistent and prevents regressions from reaching users.

Test Data Design and Coverage

Comprehensive chatbot testing depends on realistic and diverse test data. Combining real anonymized transcripts with synthetic variations helps capture how users actually speak, including slang, typos, and incomplete messages. This ensures the chatbot performs well under real-world conditions rather than ideal ones.

Coverage should include transactional requests, open-ended questions, and multi-turn conversations that test context memory. Tracking which intents and entities have been validated prevents blind spots and ensures even complex workflows are tested reliably. Well-structured test data makes it easier to measure accuracy, detect weak points, and maintain overall conversation quality.

How Cekura Simplifies Chatbot Testing

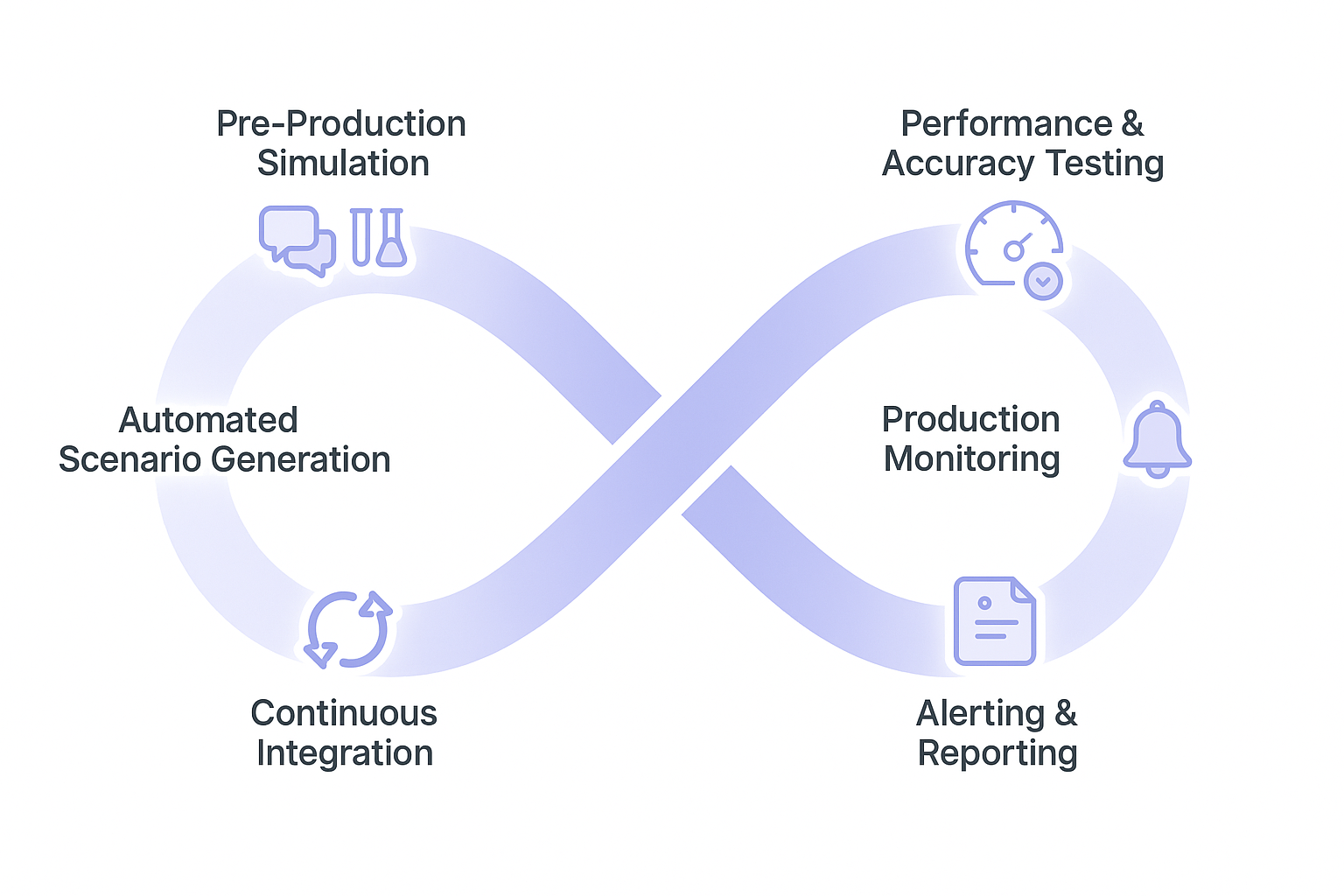

Cekura provides end-to-end testing and observability designed for modern conversational AI systems. We understand the unique challenges of maintaining accuracy, reliability, and context retention in LLM-powered chatbots.

Our platform covers the complete lifecycle from pre-production simulation to live monitoring. We run detailed simulations across diverse personas and scenarios to test how your chatbot handles different user intents, phrasing styles, and edge cases.

Production monitoring goes beyond basic uptime checks. Cekura continuously validates instruction-following, tool call accuracy, and conversational quality in real time. When issues occur, you receive immediate alerts with full context for rapid resolution.

Automated scenario generation produces thousands of test cases across both common and adversarial situations. This ensures comprehensive coverage and exposes issues that manual QA often misses.

Our testing framework simulates large-scale chat interactions to measure response accuracy, latency, and context handling under realistic conditions. This unified approach removes the need for separate testing tools and helps teams maintain consistent quality as systems evolve.

Cekura integrates seamlessly with existing development workflows, triggering automated tests on code changes and delivering detailed reports on conversation quality, accuracy, and performance metrics.

Teams using Cekura detect issues before they affect users, reduce regression risk, and gain deep visibility into chatbot behavior and performance.

Our recent fundraising success and partnership with Cisco demonstrate the market's recognition of our complete approach to chatbot quality assurance.

FAQ

What should I include in my chatbot testing checklist?

Your checklist should cover pre-deployment validation, user experience testing, security audits, and performance benchmarks.

When should I run automated tests for my conversational AI?

Run automated tests continuously throughout development. Integrate testing into your CI/CD pipeline so every code change triggers test suites, and implement ongoing production monitoring to catch regressions.

How can I prevent chatbot hallucinations?

Implement factual accuracy testing against your knowledge base, source attribution validation, and confidence calibration testing to make sure your bot expresses uncertainty when appropriate. Use consistency testing to verify that your bot provides the same answers to equivalent questions asked different ways.

Final Thoughts on Complete Chatbot Testing Strategies

The complexity of modern LLM-powered bots demands specialized approaches that go far beyond traditional software testing. Quality chatbot testing tools help you catch hallucinations, validate context retention, and make sure your bot follows business rules. With the right testing framework in place, you can deploy knowing your AI agents will deliver reliable, trustworthy conversations.